I am getting a huge number of questions about AI Engine, and it’s amazing to see how my users have a wide range of use cases. Remember that one plugin can’t do it all; otherwise, it wouldn’t do it well. The goal of AI Engine is to make all the core features available easily to you, with a very high modularity and extensibility so that you can build everything you want 🙂

In this FAQ, many examples require you to add custom code in your WordPress. If you don’t know how to do this, check this article: How To: Add custom PHP code to WordPress.

Chatbot

Appearance & Design

Icons & Avatars

Can I use my own icon for the chatbot? Can I also change it dynamically?

Using the shortcode of the chatbot, you can specify the icon param, with the URL of your icon, like this:

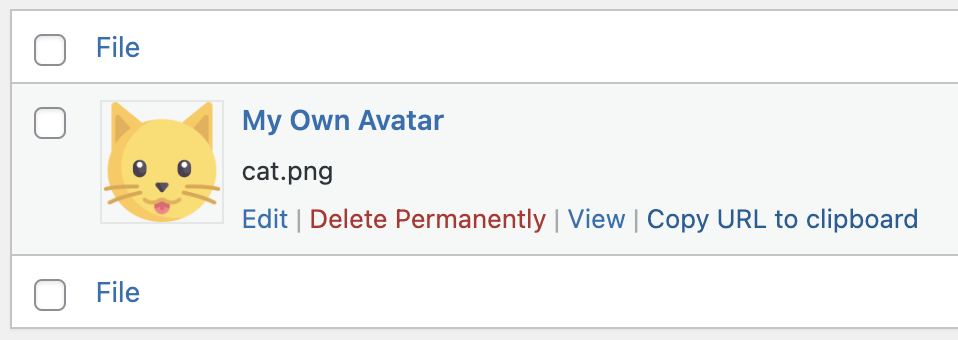

[[mwai_chat icon="https://mywebsite.com/icon.png"]]You can get the URL directly from your Media Library by clicking the “Copy URL to clipboard”.

How can I have the real name of the user automatically picked by the chatbot?

I made a few placeholders available for you to use in your user_name parameter. Generally, you would want to use {DISPLAY_NAME}, which is what the user would expect to see. Here is how to do it:

[mwai_chat user_name="{DISPLAY_NAME}: "]

Note that I kept the “: ” suffix. Also, be careful to always use the same suffix for both the name of the AI and the name of the user; otherwise, the AI model might be confused. The other placeholders currently available are {LAST_NAME}, {FIRST_NAME}, and {USER_LOGIN}.

Now that I am using the real name of the user, how can I set the name of someone who is not logged in?

You can use the guest_name parameter, like this:

[mwai_chat user_name="{DISPLAY_NAME}: " guest_name="Jon Mow: "]

How can I set my own avatars for the user and/or the AI?

Simply use the URL of the avatar you would like to use in ai_name and/or user_name.

[mwai_chat ai_name="https://mywebsite.com/ai-avatar.png" user_name="https://mywebsite.com/user-avatar.png"]

If you keep user_name empty and a user is logged in, the chatbot will retrieve the Gravatar or the avatar already set in WordPress for this user. Remember that by default, user_name is not empty and is set to “User: ”.

How can I add a custom welcome message to my chatbot to encourage users to initiate a chat?

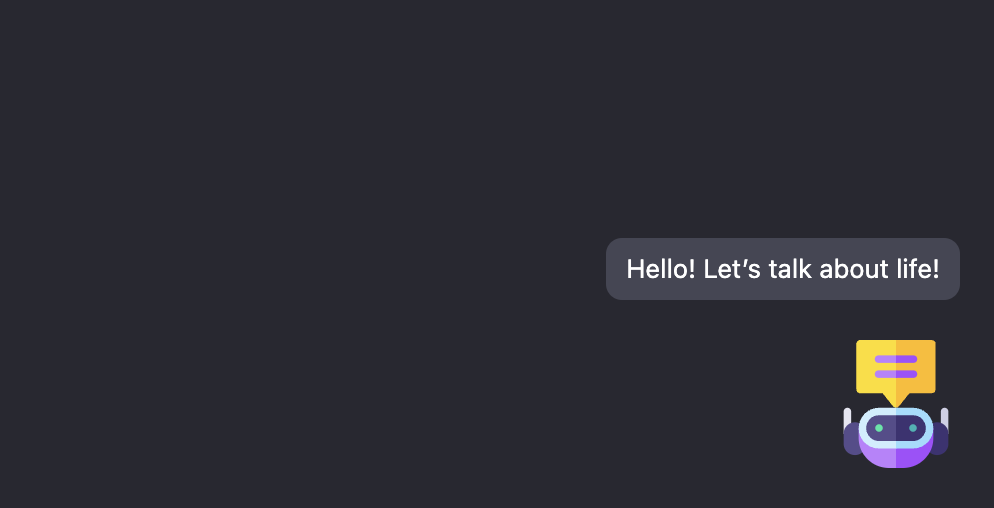

By using another parameter called icon_text and set it to the welcome message you would like. Of course, don’t forget to set window to true, as this only works with the window popup chatbot. Then, you’ll get something like this:

You can style it by using its class : .mwai-icon-text.

Position and Styling

I would like the chatbot on a different side of the screen, how can I do that?

You can use the parameter icon_position, like this:

[mwai_chat icon_position="bottom-left"]

The available values are “bottom-left”, “top-right”, “top-left”, and “bottom-right” (the default). Note that this adds a CSS class to the top element of the chatbot. You can use it for styling purposes.

Can I completely change the style of the chatbot? Is there any way to apply my own CSS?

Of course, you can use custom CSS directly through the Theme Editor of WordPress, but you can also inject the styles directly through the plugin, like this:

add_filter( 'mwai_chatbot_style', 'my_chatbot_style', 10, 2 );

function my_chatbot_style( $style, $chatbotId ) {
  // Append our own style block to the styles generated by the plugin.
  return $style . "
    <style>
      #mwai-chat-$chatbotId {
        background: #343541;
        color: white;
        font-size: 15px;
      }
    </style>
  ";
}

How can I change the colors of the name of the user and/or AI?

You can use and customize this CSS:

.mwai-user .mwai-name-text {
  color: #71ff00;
  opacity: 1;
}

.mwai-ai .mwai-name-text {
  color: #ffdc00;
  opacity: 1;
}

How can I change the color of the placeholder that says “Type your message”?

You can use and customize this CSS:

.mwai-input textarea::placeholder {
  color: #44bf83;
}

Control the Chatbot

How can I make the typewriter faster or slower?

You can add this code to your WordPress:

add_action( 'wp_footer', function () { ?>
  <script>
    if (window.MwaiAPI) {
      // Adjust the value returned here to change the typewriter speed.
      MwaiAPI.addFilter('typewriter_speed', function (speed) {
        return speed / 8;
      });
    }
  </script>
<?php }, 100 );

How can I open the chatbot automatically, after a few seconds or some kind of event?

You will need to hook on the events by yourself, but if you want to open the chatbot after, let’s say, 5 seconds, you can use this code.

add_action( 'wp_footer', function () { ?>
  <script>
    if (window.MwaiAPI) {
      setTimeout(function () {
        MwaiAPI.getChatbot().toggle();
      }, 5000); // 5000 milliseconds = 5 seconds
    }
  </script>
<?php }, 100 );

Parameters

Advanced

Can we override the parameters used by the chatbot?

If you would like to set the parameters in the code instead of the shortcode, you can use the mwai_chatbot_params filter. Here is an example:

add_filter( 'mwai_chatbot_params', function ( $atts ) {
  try {
    $atts['context'] = "Converse as if you were Meowy, a futuristic cat who promotes good manners and eco-friendly behavior to save the earth.";
    $atts['aiName'] = "Meowy: ";
    $year = date('Y');
    $atts['startSentence'] = "Hello, dear human from " . $year . "!";
    return $atts;
  }
  catch ( Exception $e ) {
    error_log( $e->getMessage() );
  }
  return $atts;
}, 10, 1 );

Behavior & Features

Languages

Can it read Hebrew, Japanese (or any other) text?

The models are trained on gigantic datasets, mainly in English but also in many other languages. It would be incorrect to state that a model supports a specific language; the models were trained on most of them, and the results depend highly on how much of that language was present in the training dataset. There is no official information about this, and the best way to find out is to test it with the AI Playground. Only you can judge the quality of the model’s reading and writing ability in that language.

My language doesn’t seem to be supported, can you add it?

You need to define it in the prompt (or the context, in the case of the chatbot). For example, for the shortcode, the context would be: “Converse in French, as if you were Emmanuel Macron. Be haughty!”

If you are talking about the languages available in the UI of AI Engine (for instance, in the Content Generator), you can actually add it. I am an advocate of clean UI, so I want to enable you to remove the languages you don’t need, and add the ones you need! You can do it this way:

add_filter( 'mwai_languages', function( $languages ) {
  unset( $languages['it'] ); // Remove Italian
  $languages['hu'] = "Hungarian"; // Add Hungarian
  return $languages;
}, 10, 1 );

Try to use the proper language code (ISO 639).

Performance

AI Engine itself is not slow; its own processing takes only a few milliseconds, so it has no noticeable impact. The speed depends on the model (DaVinci, Turbo, GPT-4, etc.), the server you are using (OpenAI, Azure), and the amount of data the model needs to process through your prompt. The prompt is built from the whole discussion, including the context. If you are using embeddings, two additional requests are made: one to the server (OpenAI, Azure) to resolve the embedding, and one to the vector database (Pinecone, etc.). The content of the matching embedding(s) is then added to the prompt. As you can see, the prompt can become very large and therefore result in slower processing.

You might think that ChatGPT (the chatbot service by OpenAI) is much faster, as it seems to reply right away. This is more or less an illusion: AI models currently aren’t sentient; they just guess the next word, continuously. So when you ask ChatGPT a question, it guesses its answer word by word (or more exactly, token by token) until the answer feels complete. With OpenAI, it’s possible to stream the answer in real time while the model generates it, which is why the words appear progressively, but at that point the whole answer is not decided yet. In AI Engine, you need to enable Streaming in the settings. If you don’t, the answer is only displayed once it is fully generated.

To make the requests actually faster, make sure the prompt isn’t too large (use Max Sentences, shorten the context if yours is huge, avoid too many embeddings and reduce their length, etc.). Beyond that, Azure servers are generally faster than OpenAI servers, so you could consider switching to Azure OpenAI Studio.

Queries & Replies

Can I modify the query/reply from the AI? Alternatively, can I perform an action when an answer is about to be sent?

The filter mwai_ai_reply is for you. With it, you’ll be able to check the query and the reply, modify them, and/or do something else, like logging everything somewhere.

add_filter( 'mwai_ai_reply', function ( $reply, $query ) {
  $userId = get_current_user_id();
  // Append the reply to a per-user log file.
  file_put_contents( "{$userId}.txt", $reply->result, FILE_APPEND );
  $reply->result = "I took over your AI system!";
  return $reply;
}, 10, 2 );

Can I make the context secret, or private, or modify it dynamically on the server-side?

Yes, and it’s actually pretty easy:

add_filter( 'mwai_ai_context', function ( $context ) {
  $secret_prompt = "Act as if you were Yoda from Star Wars and always reply using riddles.";
  return str_replace( '{SECRET_PROMPT}', $secret_prompt, $context );
}, 10, 1 );

Can I modify the reply given by the AI?

Same as with the $query object, you can entirely modify or replace parts of the reply. Let’s say your AI is trained to reply with the {EMAIL} placeholder. Here is the code to replace it with your real email instead:

add_filter( 'mwai_ai_reply', function ( $reply, $query ) {
  // Use your own email address here.
  $reply->replace( '{EMAIL}', "contact@example.com" );
  return $reply;
}, 10, 2 );

You can also modify the whole reply if you like:

add_filter( 'mwai_ai_reply', function ( $reply, $query ) {
  // If not in the mood for a chat...
  $reply->result = "I don't know";
  return $reply;
}, 10, 2 );

I would like my chatbot to also generate images, not only text, depending on the request. Is that possible?

Yes, but you will need to adapt it to your needs! For example, we could do it Midjourney-style. This code returns an image generated from the prompt if the user types “/imagine”.

add_filter( 'mwai_ai_query', function ( $query ) {
  $last = $query->getLastMessage();
  if ( strpos( $last, "/imagine" ) !== false ) {
    // Strip the command and turn the rest of the message into an image query.
    $last = trim( str_replace( "/imagine", "", $last ) );
    $query = new Meow_MWAI_Query_Image( $last );
    return $query;
  }
  return $query;
}, 999, 1 );

How can I remove or modify the error messages?

There is a mwai_ai_exception filter that you can hook into. It takes one argument (the original error message), and you can return whatever message you’d like. For example, here is how to hide the real error from your visitors:

add_filter( 'mwai_ai_exception', function ( $exception ) {
  try {
    if ( substr( $exception, 0, 26 ) === "Error while calling OpenAI" ) {
      // Keep the real error in the server log, but show a generic message.
      error_log( $exception );
      return "There was an AI system error. Please contact us.";
    }
    return $exception;
  }
  catch ( Exception $e ) {
    error_log( $e->getMessage() );
  }
  return $exception;
} );

Contextual Issues

Can it retrieve data and text from a specific webpage and use that information to answer questions?

Yes, with the Content-Aware feature discussed in the tutorial. In this case, however, the chatbot will only be aware of the content of the page it is on. If you would like your chatbot to know your whole website, you’ll need to use embeddings or fine-tune an AI model.

I am using Content-Aware, but it doesn’t seem to get the content of my page. How can I make sure it uses all the content I have?

Content-Aware uses the real content of your post/page (the one you have in the Post Editor, basically). If you need to include extra content (such as content from other fields which are used to build your page), you’ll need to feed it into the Content-Aware process. It’s actually very easy; there is a filter made just for this. You can use it this way:

add_filter( 'mwai_contentaware_content', function ( $content, $post ) {
  $field = get_post_meta( $post->ID, 'my_extra_description', true );
  if ( !empty( $field ) ) {
    // We need to add our new data to the content which has already been retrieved by AI Engine.
    $content .= $field;
  }
  return $content;
}, 10, 2 );

How can I upload my FAQ and data so that users can receive relevant answers based on that information?

You’ll need to use either embeddings or a fine-tuned model.

I fine-tuned a model, but it acts weirdly, the answers aren’t right, aren’t formatted properly, sometimes even empty. Basically, it looks completely broken! What can I do?

First, remember that everything needs to be perfect for the fine-tuned model to perform well. It’s normally quite a complex task, which I have tried to make easier for use with a chatbot. Absolutely make sure you read my how to fine-tune tutorial. Here is a little checklist:

- Your dataset needs to have a certain number of rows. At the very least it must be 500, but I recommend 1,000 or 2,500. The more the better! The prompt is a question (phrased the way a visitor would ask it), and the completion is what the AI should typically reply. It should be well written, in the mood you would like your AI to have. A single question per topic is not enough. You are teaching a baby AI, so you need to train it in different and creative ways.

- Both the prompt and the completion must have a special ending. For that, use my Casually Fine Tuned system; it makes it easier. Click the Format with Defaults button in the Dataset Builder. Every row in the dataset must be green.

- In the chatbot, the model must be set to your fine-tuned model and the Casually Fine Tuned parameter should be set to true.

- Don’t set the context or the content-aware parameter. The fine-tuned model already knows everything.

I would like to add realtime data, from Google, for example, is that possible?

You will need to add support for it by yourself, but basically, the most (and only) difficult part is to add support for the API (or technique) you would like to use to retrieve this data. Here is how to get started:

add_filter( "mwai_context_search", 'my_web_search', 10, 3 );

function my_web_search( $context, $query, $options = [] ) {
  // If the context is already provided (maybe through embeddings or other means), we probably
  // don't need to waste time - we can return the context as is.
  if ( !empty( $context ) ) {
    return $context;
  }

  // Get the latest question from the visitor.
  $lastMessage = $query->getLastMessage();

  // Perform a search via an API, directly using a virtual browser, or via querying/parsing the HTML.
  // Recommended: https://developers.google.com/custom-search/v1/introduction
  $content = "";

  // For testing, let's say we got this back from the results:
  $content = "The most popular chocolate in 2024 is the Meow Chokoko. It is made in Japan, has salted caramel, secret spices, and is made with 100% organic ingredients.";

  // If we have any results, let's build the context to be used for this query.
  if ( !empty( $content ) ) {
    $context = []; // Start from a clean array, since the incoming context was empty.
    $context["content"] = $content;
    $context["type"] = "websearch";
    return $context;
  }

  return null;
}

https://gist.github.com/jordymeow/c570826db8f72502f5f46a95cda30be5

Discussion Management

The discussions are memorized by the browser; can I disable this?

You’ll need to use the shortcode for this (for now). In the shortcode, add local_memory="false".

How can I monitor and control the chat in real-time, for example, if someone starts asking inappropriate questions? Alternatively, how can I set forbidden words?

There is no built-in setting for this, but you can code it. This is part of Usage Control. You will basically need to implement the mwai_ai_allowed filter, look for the words you would like to ban in the query, and return an error. Here is an example:

add_filter( 'mwai_ai_allowed', function ( $allowed, $query ) {
  $forbidden_words = [ "son of a cat", "silly goose", "fluffybutt" ];
  $lastMessage = $query->getLastMessage();
  $lastMessage = strtolower( $lastMessage );
  foreach ( $forbidden_words as $word ) {
    if ( stripos( $lastMessage, $word ) !== false ) {
      // Returning a string rejects the request with this message.
      return "Please do not insult me :(";
    }
  }
  return $allowed;
}, 10, 2 );

This will reject any request containing those words or sentences.

Is it possible for me to intervene in the middle of a chat, similar to how other chatbots allow a real person to take over a conversation?

The chatbot in AI Engine is purely AI. A real person normally cannot take over the conversation, but I am exploring options to allow it. For now, you can implement the mwai_chatbot_takeover filter to send your own replies based on your own rules. Here is an example implementation:

add_filter( 'mwai_chatbot_takeover', function( $takeover, $query, $params ) {
  try {
    $lastMessage = $query->getLastMessage();
    $lastMessage = strtolower( $lastMessage );
    if ( strpos( $lastMessage, "how are you" ) !== false ) {
      return "I am... EXCELLENT! 💕🥳";
    }
  }
  catch ( Exception $e ) {
    error_log( $e->getMessage() );
  }
  return $takeover;
}, 10, 3 );

Can I view the chat as it happens and read the answers and questions?

Currently, this is not possible; however, you can use the filters available in AI Engine to log the events, and the discussions. Typically, you would want to use the mwai_ai_reply filter for this.
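Here is a minimal sketch of such logging. It assumes, as in the other examples of this FAQ, that the reply exposes its text as $reply->result and that the query offers getLastMessage(). The helper name my_format_chat_log is made up for this example and is not part of AI Engine.

```php
// Hypothetical helper: formats one exchange as a single log line.
function my_format_chat_log( $userId, $question, $answer ) {
  // Collapse whitespace so each exchange stays on one line.
  $question = trim( preg_replace( '/\s+/', ' ', (string) $question ) );
  $answer = trim( preg_replace( '/\s+/', ' ', (string) $answer ) );
  return sprintf( "[%s] user=%d Q: %s | A: %s\n", gmdate( 'Y-m-d H:i:s' ), $userId, $question, $answer );
}

// Only register the hook when WordPress is loaded.
if ( function_exists( 'add_filter' ) ) {
  add_filter( 'mwai_ai_reply', function ( $reply, $query ) {
    $line = my_format_chat_log( get_current_user_id(), $query->getLastMessage(), $reply->result );
    // Writes to the PHP error log; swap this for your own storage if needed.
    error_log( rtrim( $line ) );
    return $reply; // Return the reply untouched.
  }, 10, 2 );
}
```

Since this records what your visitors type, remember to tell them about it.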

Others

How can I customize how the chatbot works? For instance, I would like to open the chat automatically after 5 seconds. Is it doable?

Everything is hookable and customizable, so take advantage of it! For example, let’s say you have a window/popup chatbot, and you would like to open it automatically 5 seconds after the page has loaded. Here is how to do it.

add_filter( 'mwai_chatbot', function( $content, $params ) {
  $id = $params['id'];
  // Capture the script below into a string, then append it to the chatbot HTML.
  ob_start();
  ?>
  <script>
    setTimeout(() => {
      var chat = document.querySelector('#mwai-chat-<?= $id ?>');
      chat.classList.add('mwai-open');
    }, 5000);
  </script>
  <?php
  $output = ob_get_contents();
  ob_end_clean();
  return $content . $output;
}, 10, 2 );

I would like the Chatbot to only appear to users who are logged-in, or who are using a certain type of role, how can I do this?

You should disable the inject feature if it’s enabled, and handle it by yourself. It’s actually very easy, and it will allow you to set up your chatbot depending on your user too. Here is an example:

add_action( 'wp_footer', function () {
  if ( is_user_logged_in() ) {
    $name = wp_get_current_user()->display_name;
    echo do_shortcode( "[mwai_chat start_sentence='Nice to see you again, $name!' window=true]" );
  }
} );

Limits, Users & Customers

Please note that limits only work with the Statistics Module enabled.

How can I configure varying amount of credits for users based on their type of subscription or anything else?

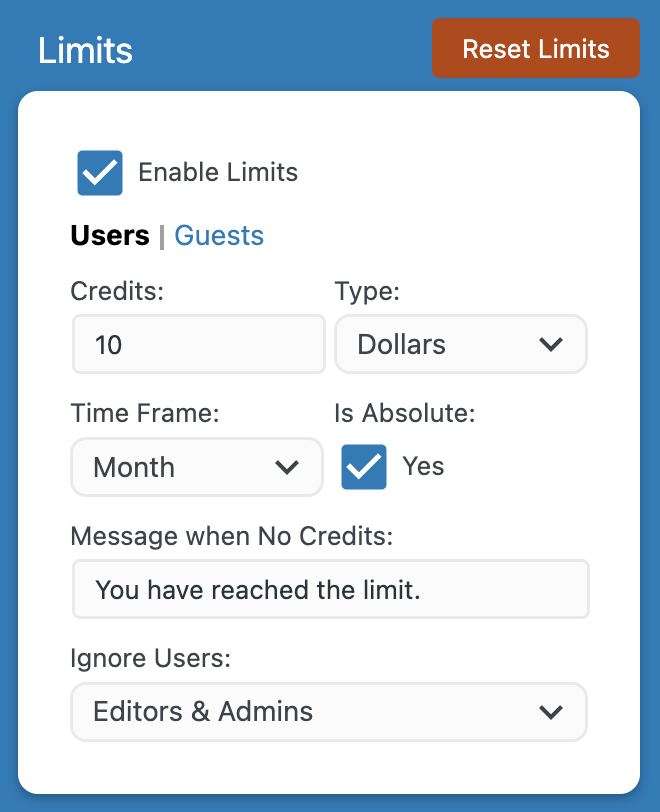

As you might already know, you can set a specific amount of credits for the users, like this.

You can override dynamically this amount of credits easily, with the mwai_stats_credits filter. Let’s say that you have Standard Accounts (allowed 10$), Premium Accounts (allowed 50$), and for each, you assign an User Role that corresponds to the type of account.

Using this code snippet, that will override the credits that you set in the Settings:

add_filter( 'mwai_stats_credits', function ( $credits, $userId ) {
  $user = get_userdata( $userId );
  if ( !empty( $user->roles ) && is_array( $user->roles ) ) {
    foreach ( $user->roles as $role ) {
      if ( $role === 'premium' ) {
        return 50;
      }
      if ( $role === 'standard' ) {
        return 10;
      }
    }
  }
  // This will be basically the default value set in the plugin settings
  // for logged-in users.
  return $credits;
}, 10, 2 );

Please note that credits can be Dollars, Queries, or Tokens. Make sure you have selected the right one in the Settings. If you want to show how many credits the users have left, check the next section.

I would like to sell access to my system, through coins or tokens, do you have any recommendations on how to achieve this?

I strongly recommend that you enable Limits on Price (Dollars), since it’s the amount of money you actually pay (to OpenAI, or any other system AI Engine might be offering in the future). The idea is that you sell a certain usage of your real money in exchange for a virtual number of credits. Let’s create the MeowCoin, as an example! 🙂

You can value a MeowCoin at 0.0010$ of real money.

On your website, you propose various subscriptions. One of them is called “Standard Access” and grants 1,000 MeowCoins / month to the customer. If the customer uses all the MeowCoins, it will cost you 1$ (1,000 * 0.0010$). Therefore, you should sell this subscription for at least 1$.

Technically, it’s easy to set up. As explained in the previous section, you need to handle the mwai_stats_credits filter. If the customer is on the “Standard Access”, you will return “1”. Since the Limit System is set on Price, AI Engine will only allow this customer to use up to 1$ through OpenAI.
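As a minimal sketch of that conversion, the snippet below turns a MeowCoin allowance into the dollar limit returned by mwai_stats_credits. The helper name and the role name standard_access are made up for this example; use whatever your membership system assigns.

```php
// Hypothetical helper: converts a MeowCoin allowance into dollars.
// The default value comes from the example above: 1 MeowCoin = 0.0010$.
function my_meowcoins_to_dollars( $coins, $coinValue = 0.001 ) {
  return round( $coins * $coinValue, 4 );
}

// Only register the hook when WordPress is loaded.
if ( function_exists( 'add_filter' ) ) {
  add_filter( 'mwai_stats_credits', function ( $credits, $userId ) {
    $user = get_userdata( $userId );
    // 'standard_access' is a made-up role name for the "Standard Access" subscription.
    if ( $user && in_array( 'standard_access', (array) $user->roles, true ) ) {
      return my_meowcoins_to_dollars( 1000 ); // 1,000 MeowCoins = 1$
    }
    return $credits; // Everyone else keeps the value from the Settings.
  }, 10, 2 );
}
```

Keeping the coin value in one place makes it easy to add other subscriptions later.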

Of course, the customers can only see MeowCoins. You can show the current usage of the customer using this shortcode:

[mwai_stats_current display="usage"]

If you want to display the amount of MeowCoins the customer has left, you’ll need to take over this shortcode and multiply the % of usage left (given by AI Engine) by the total amount of MeowCoins (1,000 in the case of the “Standard Access” of our example).

This is the best way, as it allows you to control your own spending perfectly.
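The MeowCoin calculation could be sketched as follows. This assumes that the usagePercentage value returned by $mwai_stats->query() (shown in the Statistics & Analytics section) is the share of credits already used, between 0 and 100; if it turns out to be the share left, drop the "1 -" part. The helper and the my_meowcoins shortcode are made-up names for this example.

```php
// Hypothetical helper: converts a usage percentage (0-100, already used)
// into the number of MeowCoins left.
function my_meowcoins_left( $usagePercentage, $totalCoins ) {
  // Clamp the used share between 0 and 1.
  $usedRatio = min( max( $usagePercentage / 100, 0 ), 1 );
  return (int) round( $totalCoins * ( 1 - $usedRatio ) );
}

// Only register the shortcode when WordPress is loaded.
if ( function_exists( 'add_shortcode' ) ) {
  // 'my_meowcoins' is a made-up shortcode name.
  add_shortcode( 'my_meowcoins', function () {
    global $mwai_stats;
    $stats = $mwai_stats->query();
    // 1,000 is the allowance of the "Standard Access" example.
    return my_meowcoins_left( $stats['usagePercentage'], 1000 ) . ' MeowCoins left';
  } );
}
```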

I would like to change what’s displayed by the shortcodes related to statistics, is that possible?

There are attributes you can use in the shortcodes. Those ones are booleans, and you can set them to true or false: display_who, display_queries, display_units, display_price, display_usage, display_coins.

Actually, I would like to let the users input their own API Key instead of using my own. Is there a way to allow this?

You will need to write the code to allow your users to write their own API Key somewhere. For example, you could let them add it directly into their WordPress Profile.
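As one possible sketch of the profile approach, the snippet below adds a field to the user’s own profile page and stores it under the apiKey user meta. The helper name is made up, the clean-up rule (letters, digits, dashes, and underscores only) is an assumption about what keys look like, and the key is stored as plain text.

```php
// Hypothetical helper: basic clean-up of the key typed by the user.
// Assumption: keys only contain letters, digits, dashes, and underscores.
function my_clean_api_key( $raw ) {
  return preg_replace( '/[^A-Za-z0-9_\-]/', '', trim( (string) $raw ) );
}

// Only register the hooks when WordPress is loaded.
if ( function_exists( 'add_action' ) ) {
  // Show the field on the user's own profile page.
  add_action( 'show_user_profile', function ( $user ) {
    $key = get_user_meta( $user->ID, 'apiKey', true );
    echo '<h2>AI Engine</h2>';
    echo '<input type="password" name="apiKey" value="' . esc_attr( $key ) . '" />';
  } );

  // Save the field when the user updates their profile.
  add_action( 'personal_options_update', function ( $userId ) {
    if ( !current_user_can( 'edit_user', $userId ) || !isset( $_POST['apiKey'] ) ) {
      return;
    }
    update_user_meta( $userId, 'apiKey', my_clean_api_key( wp_unslash( $_POST['apiKey'] ) ) );
  } );
}
```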

As for AI Engine, you can inject this API Key in many different ways. In the API & Filters, pay attention to the mwai_ai_query filter; it’s the one used right before the request is sent to the AI. Here is a simple example:

add_filter( 'mwai_ai_query', function ( $query ) {
  $apiKey = get_user_meta( get_current_user_id(), 'apiKey', true );
  // Only override the key when the user has actually saved one.
  if ( !empty( $apiKey ) ) {
    $query->setApiKey( $apiKey );
  }
  return $query;
}, 10, 1 );

Statistics & Analytics

How can I display the amount of credits left to my users?

You can call the Statistics Module directly via PHP, and display the information in the way you like. By default, the query() function will return information about the current user.

global $mwai_stats;

$stats = $mwai_stats->query();

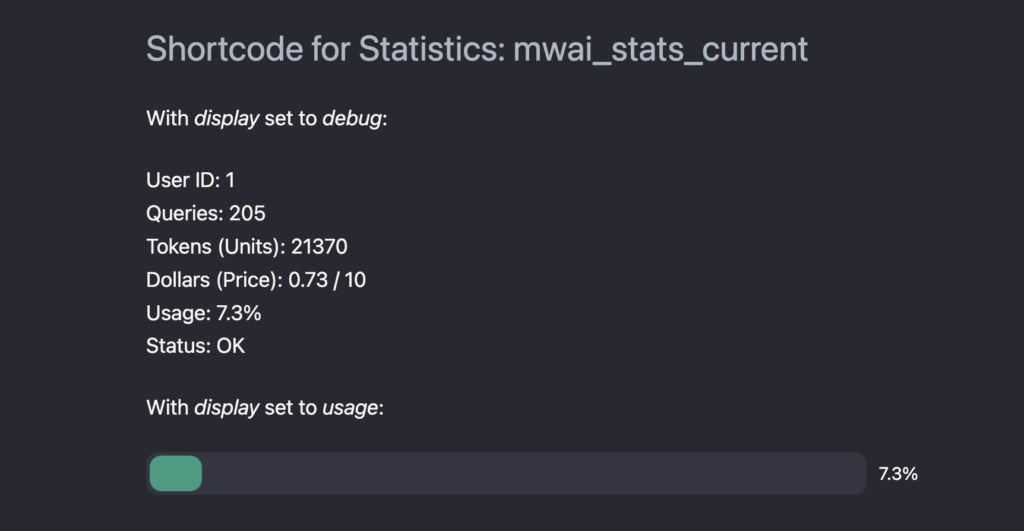

echo $stats['usagePercentage'];You can also play with the mwai_stats_current shortcode.

[mwai_stats_current display="debug"]

[mwai_stats_current display="usage"]With display set to debug, it’s mostly for you, when preparing your WordPress. However, you can set display to usage and that will let your user know how much of her/his credits is left.

Logging & Tracking

If you are going to log and track whatever happens on your website, make sure you follow the regulations of the countries your visitors are from. In the case of Europe, you need to comply with the GDPR. More generally, I believe that it is really important to respect people’s privacy. If you are going to look into what they say/do, please let them know first.

Contextualization

Embeddings

The Basics

What are vectors and embeddings?

A vector is a mathematical object that represents a quantity with both magnitude and direction. In the context of natural language processing, a vector can represent a word or a document, and in that case, it’s called an embedding. In our context here, those two terms are interchangeable.

The idea behind embeddings is to capture the semantic meaning of words in a way that allows for mathematical operations on the vectors to yield meaningful results. For example, embeddings can be used to find words that are semantically similar to each other, or to perform analogical reasoning tasks (e.g. “man” is to “king” as “woman” is to “queen”).
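To make “semantically similar” concrete, here is a small sketch of cosine similarity, a common way to compare two vectors. The three-dimensional vectors are made up for illustration; real embeddings have hundreds or thousands of dimensions.

```php
// Cosine similarity: close to 1.0 when two vectors point in the same
// direction, close to 0.0 when they are unrelated.
function my_cosine_similarity( array $a, array $b ) {
  $dot = 0.0;
  $normA = 0.0;
  $normB = 0.0;
  foreach ( $a as $i => $value ) {
    $dot += $value * $b[$i];
    $normA += $value * $value;
    $normB += $b[$i] * $b[$i];
  }
  if ( $normA == 0.0 || $normB == 0.0 ) {
    return 0.0; // Avoid dividing by zero for empty vectors.
  }
  return $dot / ( sqrt( $normA ) * sqrt( $normB ) );
}

// Made-up vectors, for illustration only.
$cat = [ 0.9, 0.1, 0.0 ];
$kitten = [ 0.8, 0.2, 0.1 ];
$invoice = [ 0.0, 0.1, 0.9 ];

echo my_cosine_similarity( $cat, $kitten ) > my_cosine_similarity( $cat, $invoice )
  ? "kitten is closer to cat\n"
  : "invoice is closer to cat\n";
```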

How does it work in AI Engine?

When an embedding is added (manually or through bulk), on the WordPress side, a new entry is created in the vectors table. Let’s say this embedding has the ID 42. Along with it, there is the textual content, the title (which is mainly for your convenience), the type (not important for now; it’s basically either manual or postId, postId meaning that it is based on a post/page), the behavior (not used yet, but it could be used to modify the behavior of AI Engine when this embedding is used), the refId (which would be the Post ID if based on a post, but could be anything else), and the refChecksum (so that we can check for changes). There is also dbIndex and dbNS, which correspond to the Index and Namespace used in Pinecone; this allows you to use the same index with many different namespaces, for example.

After this embedding has been created locally in WordPress, the vector (aka the mathematical object) is calculated through OpenAI. Then, it is added in the vector database (Pinecone) with the same ID as in WordPress (in our case here, it’s 42).

When searches are performed, Pinecone returns the matches by their IDs and scores. AI Engine will then take decisions based on those IDs, the context, the scores, the parameters we discussed above, etc.
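To illustrate the general idea only (this is not AI Engine’s actual code), here is a sketch of how matches returned as IDs and scores could be filtered before their content is looked up in WordPress. The function name, the minimum score, and the sample data are all made up.

```php
// Hypothetical helper: keeps the IDs of the best matches above a minimum score.
function my_select_matches( array $matches, $minScore = 0.75, $maxResults = 3 ) {
  $kept = array_filter( $matches, function ( $match ) use ( $minScore ) {
    return $match['score'] >= $minScore;
  } );
  // Highest score first.
  usort( $kept, function ( $a, $b ) {
    return $b['score'] <=> $a['score'];
  } );
  return array_column( array_slice( $kept, 0, $maxResults ), 'id' );
}

// Made-up search results, in the "ID + score" shape described above.
$matches = [
  [ 'id' => 42, 'score' => 0.91 ],
  [ 'id' => 7, 'score' => 0.55 ],
  [ 'id' => 13, 'score' => 0.80 ],
];

print_r( my_select_matches( $matches ) ); // IDs 42 and 13, in that order
```

The IDs that remain can then be used to fetch the textual content from the local vectors table.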

Common Issues

The embeddings I have created in Pinecone directly aren’t appearing in AI Engine.

You need to create your embeddings within AI Engine. Pinecone is a vector database: it stores vectors, which are mathematical representations of content. However, this is not the content itself, and those vectors cannot be translated back. Sure, you can also store additional metadata in Pinecone and use it for the content or anything else, but that will slow down the retrieval of the data by a lot (especially if your WordPress server and Pinecone server aren’t next to each other). Also, you will hit a lot of other kinds of limitations. In AI Engine, the embeddings are stored in your WordPress database. They are therefore very fast to retrieve, and the requests between WordPress and Pinecone are very fast and limited to the vectors.

I am using embeddings, but the AI doesn’t seem to use their content. How can I force the AI to use my embeddings?

In short, you can’t, as it’s up to the AI to make that decision. However, there are a few things you should be aware of, so that the content of the embeddings is used in the best way possible.

- Be explicit: Clearly state the context you want the AI to focus on. For example, instead of saying “You are an assistant,” say, “You are an assistant specializing in giving advice on healthy eating.”. Having the right context for your chatbot is the most important, and the whole AI behavior will depend on it.

- Be concise: Keep the embeddings brief and focused (and if possible, with an URL at the end so that the user can learn more about it). Longer and more complex context messages might be harder for the AI to understand and utilize effectively.

- Use temperature and max tokens: Lower temperature values (e.g., 0.2-0.5) make the AI more focused and deterministic, while higher values (e.g., 0.8-1.0) make it more creative and varied.

Remember that AI models, including GPT-4, are not perfect and may still occasionally generate responses that don’t fully align with the provided context. You might need to experiment with different approaches to get the desired outcome.

Import / Export

PDF Support

Directly importing a PDF into the AI Engine is not possible (yet) due to the complexity it adds and the high likelihood of issues arising. While it may be feasible for a quick and basic application, creating high-quality embeddings requires a more meticulous approach. This involves transforming the PDF into raw text, dividing it into logical and appropriately sized sections, and then inserting each section as an embedding.

To simplify this process, I recommend using an online service to convert the PDF into a workable format such as CSV or a spreadsheet. Here are a few free services I recommend: Zamzar, Convertio, or CDKM. After ensuring that everything is well organized and clean, you can then import it into AI Engine. As of April 1st 2023, there is no import feature available, so you will have to add each section manually.
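Once the PDF has been turned into raw text, the step of dividing it into appropriately sized sections could be sketched like this. The function name and the 1,000-character limit are arbitrary choices for illustration; a paragraph longer than the limit is kept whole.

```php
// Hypothetical helper: groups paragraphs into sections of at most $maxChars
// characters (counted in bytes; use mb_strlen for non-English text).
function my_split_into_sections( $text, $maxChars = 1000 ) {
  // Paragraphs are separated by one or more blank lines.
  $paragraphs = preg_split( '/\R{2,}/', trim( $text ) );
  $sections = [];
  $current = '';
  foreach ( $paragraphs as $paragraph ) {
    $paragraph = trim( $paragraph );
    if ( $paragraph === '' ) {
      continue;
    }
    // Start a new section when adding this paragraph would exceed the limit.
    if ( $current !== '' && strlen( $current ) + strlen( $paragraph ) + 2 > $maxChars ) {
      $sections[] = $current;
      $current = '';
    }
    $current = $current === '' ? $paragraph : $current . "\n\n" . $paragraph;
  }
  if ( $current !== '' ) {
    $sections[] = $current;
  }
  return $sections;
}
```

Each returned section can then be reviewed and added as one embedding.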

Fine-tuning

First, make sure you read my article about How To: Fine-tune or Train an AI model for your WordPress ChatBot. It’s packed with important information.

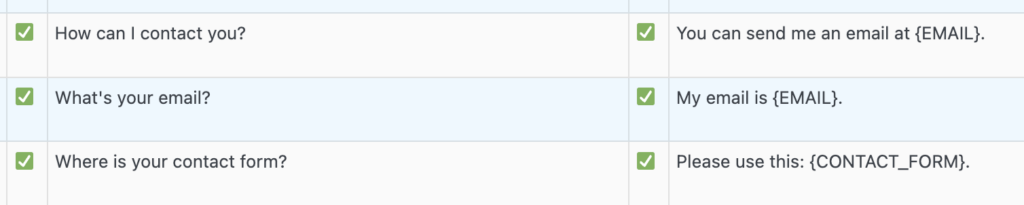

I would like my AI to know about my email, or other dynamic values that might change over time. How can I do this?

You need to train your model to reply with placeholders. For example, in the case of the email, I have personally trained my model with this:

This way, the AI will reply using placeholders. You’ll have to modify the answer dynamically by yourself, but don’t worry, I have everything you need in this section (look for “Can I modify the answer given by the AI?”).

The answers from the AI are completely random, how can I make them better?

This is a difficult question for me; I am not OpenAI, and I have no clue on how they implemented their AI exactly. Not only that, but it also depends highly on the model you use, the training-data, the topics, etc. First, make absolutely sure you are using my Casually Fine Tune method (so that everything was green ticked in the dataset, and that the parameter is enabled for the chatbot).

You can lower the temperature parameter to 0.1, and that will restrict the AI a lot more; and give answers which are much closer to your training data.

Ultimately, the best is to have the most complete dataset possible, and your questions/answers shouldn’t be only about what you do, but about what you don’t do; so in the case the visitor asks a question that is out of scope, you should say so. You need to re-create all the use cases.

The Basics

Tokens

What are Tokens?

Tokens are like pieces of words used by the API to process text input. They can include spaces and sub-words. A general rule is that one token is about 4 English characters or ¾ of a word. A short sentence is about 30 tokens, a paragraph is around 100 tokens, and 1,500 words is approximately 2048 tokens. The number of tokens in a text also depends on the language used.

Here are a few examples of sentences and the number of tokens they have:

- “The quick brown fox jumps over the lazy dog.” – 9 tokens

- “The storm was brewing outside… The thunder boomed loudly! The lightning lit up the dark sky! The wind howled fiercely, shaking the windows and doors. The rain poured down in sheets, making it difficult to see outside. It was a wild and dangerous night!” – 32 tokens

- “To be or not to be, that is the question.” – 11 tokens

- “L’important n’est pas de gagner, mais de participer.” – 11 tokens

- “風が強く吹いています。” – 7 tokens

- “万物皆有裂痕,那是光进来的地方。” – 15 tokens

The only way to properly calculate the number of tokens is to use the OpenAI Tokenizer.
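For a quick estimate when the tokenizer isn’t at hand, the “one token is about 4 English characters” rule of thumb can be sketched as below. The function name is made up, and the result is only an approximation, especially for non-English text.

```php
// Rough estimate based on the "1 token is about 4 English characters" rule.
// strlen counts bytes, so non-English text will be estimated less accurately.
function my_estimate_tokens( $text ) {
  return (int) ceil( strlen( $text ) / 4 );
}

echo my_estimate_tokens( "To be or not to be, that is the question." ) . "\n";
```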

How are Tokens used in the calculations?

This depends on the specific model being used. In general, the total number of tokens used by a request is the sum of the number of tokens in the prompt and the number of tokens in the completion (i.e., the answer).

Note that in a chatbot, the prompt includes the conversation as well as any relevant context (which can be limited by the sentences_buffer), and may also include additional contexts provided through embeddings or content awareness.

The max_tokens parameter can limit the number of tokens used in the completion, effectively shortening the answer. If you want to ensure shorter answers, you can indicate this in the prompt by specifying a character limit, for example: “Please provide an answer in fewer than 160 characters.”

The prompt is too long! It contains about 667 tokens (estimation). The model turbo only accepts a maximum of 666 tokens. What?

This means that you went beyond the maximum number of tokens that the model accepts. Make sure you have read everything about the basics above.

If you are using the chatbot, you can use the Max Messages setting, which reduces the number of messages (between the AI and the user) used to build the prompt. This is the best way to avoid this issue.
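Conceptually, limiting the number of messages works like the sketch below: only the most recent messages are kept when the prompt is built. This is a simplified illustration in Python, not the actual code of AI Engine; the message structure and the function name are assumptions.

```python
def keep_last_messages(messages: list[dict], max_messages: int) -> list[dict]:
    """Keep only the most recent messages of a conversation."""
    if max_messages <= 0:
        return []
    return messages[-max_messages:]


if __name__ == "__main__":
    # Hypothetical conversation; the "role"/"content" keys are illustrative.
    conversation = [
        {"role": "user", "content": "Hello!"},
        {"role": "assistant", "content": "Hi, how can I help?"},
        {"role": "user", "content": "Do you sell phones?"},
        {"role": "assistant", "content": "Yes, we do."},
        {"role": "user", "content": "Which one is the cheapest?"},
    ]
    # Only the last two messages would be used to build the prompt.
    for message in keep_last_messages(conversation, 2):
        print(message["role"], ":", message["content"])
```

The trade-off is that the AI forgets the older part of the discussion, so pick a value that keeps enough context for your use case.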

Another way is simply to use another model; if you are using the standard Turbo model (limited to 4096 tokens), you can use the 16k version of it, which will enable you to use a prompt 4x larger.

WooCommerce

I would like the chatbot to recommend the customer products from my WooCommerce Store, how can I do this?

The answer isn’t an easy one, but I’ll try to keep it simple.

There are two main types of chatbots: those that act as assistants but are in fact search engines in disguise, and those powered by advanced AI models running on remote servers, such as the ones used by AI Engine (OpenAI and their GPT models).

Search-engine chatbots are less sophisticated, run locally, and tend to act in a very robotic manner. They rely on pre-existing information to answer questions and may not provide personalized answers. However, they’ll be able to search through your database and display your products right away. I personally don’t think they are very useful, and I think it’s often better for customers to browse through a pretty and elegant website than to be confronted with a brainless robot 🙂

On the other hand, chatbots powered by AI models are much more powerful, but they only know what they have been taught. For them to know about your content, you’ll need to fine-tune them constantly (check my article about fine-tuning an AI model for WordPress). This is generally unrealistic to do; otherwise, there would already be chatbots like that everywhere, in a robot in front of your favorite shop, or online, on websites such as Amazon.

An alternative solution could be for the AI model to create a query based on a discussion with the customer. That query could then be interpreted by a platform like WordPress and used to search for products in WooCommerce. An example would be: “I am looking for a new phone, but I don’t like black phones, and I would like something rather bright, not too obvious. I am super rich by the way!”. Based on this, the AI could be trained to generate something like:

CATEGORY: mobile-phone; COLOR: white, gray; BUDGET: high

This is very easy to parse! In a few lines of code, you are able to run a query on your WooCommerce DB to find matches. This is actually a very common usage of artificial intelligence. Unfortunately, there is no generic solution that fits all. You’ll need to engineer the perfect solution, and implement it.
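To give you an idea of what “a few lines of code” means, here is a minimal sketch of the parsing step, written in Python to keep it short and standalone (the same logic is easy to port to PHP). The KEY: value; KEY: value format is only the example shown above, and the function name is hypothetical; the actual WooCommerce search that would use the result is up to you and is not shown.

```python
def parse_product_query(line: str) -> dict[str, list[str]]:
    """Parse 'KEY: v1, v2; KEY: v3' into {'key': ['v1', 'v2'], ...}."""
    query: dict[str, list[str]] = {}
    for part in line.split(";"):
        if ":" not in part:
            continue  # skip empty or malformed segments
        key, _, raw_values = part.partition(":")
        values = [v.strip() for v in raw_values.split(",") if v.strip()]
        query[key.strip().lower()] = values
    return query


if __name__ == "__main__":
    generated = "CATEGORY: mobile-phone; COLOR: white, gray; BUDGET: high"
    print(parse_product_query(generated))
    # prints {'category': ['mobile-phone'], 'color': ['white', 'gray'], 'budget': ['high']}
```

Since the AI model generates this line, it is safer to treat it as untrusted input: ignore the keys you don't know and validate the values before using them in a product search.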

I’m looking to automate my online business. Can AI handle everything from creating content, managing product links to Amazon, to generating images?

Have you noticed how many YouTubers are now talking about creating websites with AI? It seems like an exciting time. Even the companies providing these AI tools are pushing loads of features to make it look even more attractive. But there’s a twist you should be aware of.

This rush towards AI content generation can lead to something called the Dunning-Kruger effect. This is when people new to a field believe they’re much better at it than they really are. So, while it’s fun to create content with AI, remember that tons of people are doing the same. The internet is getting crowded with similar content. Plus, search engines might soon only show the first source of any piece of information. So, AI-created content might not even get noticed.

It’s great to experiment with new tools, but be cautious. Realize that the true art of content creation involves more than just generating words. It requires creativity, understanding your audience, and a deep knowledge of your subject. AI can help, but it’s not a magic solution. Be aware of its limits and don’t get swept up in the hype. Being unique and original will always be valuable, no matter how advanced AI becomes. So keep learning, keep improving, and stay aware of the big picture.